Using Test Results For CI Optimisation

Most modern tech teams use CI as part of their deployment process. Various SaaS providers and testing frameworks allow CI processes to be set up in minutes.

This article looks at how historical CI results can be used to highlight problem areas in CI builds.

Once you have a functioning CI process, there are a number of common problems that can affect a project:

- The number of tests increases, leading to slower build times.

- Missing external mocks, causing flaky behaviour.

- Missing dependency lock files, causing builds to fail unexpectedly for reasons unrelated to code changes.

- Previously skipped tests not being re-activated, lowering test coverage.

As projects age, knowledge of previous build behaviour is lost, and resolving issues such as those above can be time-consuming.

Recording historical test details can help highlight CI problems and allow them to be addressed before they affect an engineer's workflow.
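As a rough illustration of what such a history makes possible, the sketch below takes per-build results and flags tests whose outcome changed between builds, along with the total test time of each build. The data layout (a list of builds, each mapping a test name to an outcome and a duration) and the function names are assumptions made for this example, not something any CI provider prescribes. A test that both passed and failed is only a flaky candidate, since a genuine regression looks the same in this data.

```python
from collections import defaultdict

# Hypothetical history: one dict per build, oldest first.
# Each maps a test name to (outcome, duration in seconds).
HISTORY = [
    {"test_login": ("passed", 0.4), "test_report": ("passed", 2.0)},
    {"test_login": ("failed", 0.5), "test_report": ("passed", 2.6)},
    {"test_login": ("passed", 0.4), "test_report": ("passed", 3.1)},
]


def flaky_candidates(history):
    """Return names of tests that both passed and failed across the builds."""
    outcomes = defaultdict(set)
    for build in history:
        for name, (outcome, _duration) in build.items():
            outcomes[name].add(outcome)
    return sorted(
        name for name, seen in outcomes.items()
        if {"passed", "failed"} <= seen
    )


def total_time_per_build(history):
    """Return the summed test duration of each build, oldest first."""
    return [
        round(sum(duration for _outcome, duration in build.values()), 3)
        for build in history
    ]


print(flaky_candidates(HISTORY))      # ['test_login']
print(total_time_per_build(HISTORY))  # [2.4, 3.1, 3.5]
```

In this made-up data, the rising totals would point at test_report getting slower over time, which is the kind of trend that is easy to miss when looking at one build at a time.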

Test Results

Most test frameworks can export results in addition to displaying them in the terminal. Thanks to the popularity of Java and its testing framework JUnit, the JUnit XML format has become the de facto standard for reporting test results.
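To give a feel for the format, here is a minimal sketch that reads a JUnit-style report with Python's standard library and lists each test's outcome and duration. The sample report is hand-written for this example. The exact attributes vary between tools, so treat the element and attribute names used here (testcase, classname, name, time, failure, error, skipped) as the commonly seen subset rather than a formal schema.

```python
import xml.etree.ElementTree as ET

# A small, hand-written report in the common JUnit XML shape (illustrative only).
SAMPLE_REPORT = """\
<testsuite name="example" tests="4" failures="1" skipped="1" time="10.3">
  <testcase classname="tests.test_app" name="test_fast" time="0.1"/>
  <testcase classname="tests.test_app" name="test_slow" time="10.0"/>
  <testcase classname="tests.test_app" name="test_broken" time="0.2">
    <failure message="assert 1 == 2"/>
  </testcase>
  <testcase classname="tests.test_app" name="test_old" time="0.0">
    <skipped message="temporarily disabled"/>
  </testcase>
</testsuite>
"""


def summarise(xml_text):
    """Return a list of (full test name, outcome, seconds) tuples."""
    root = ET.fromstring(xml_text)
    cases = []
    # iter() finds <testcase> whether the root is <testsuite> or <testsuites>.
    for case in root.iter("testcase"):
        if case.find("failure") is not None or case.find("error") is not None:
            outcome = "failed"
        elif case.find("skipped") is not None:
            outcome = "skipped"
        else:
            outcome = "passed"
        name = f'{case.get("classname", "")}.{case.get("name", "")}'
        cases.append((name, outcome, float(case.get("time", 0))))
    return cases


def slowest(cases, count=3):
    """Return the `count` longest-running tests, slowest first."""
    return sorted(cases, key=lambda case: case[2], reverse=True)[:count]


for name, outcome, seconds in slowest(summarise(SAMPLE_REPORT)):
    print(f"{seconds:6.1f}s  {outcome:8}  {name}")
```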

Here are some links that document how to enable XML output on modern testing tools:

Configuration

Let's cover some simple setup examples to show how easy it is to start generating test reports. After installing the required dependencies from the links above, you enable report generation by adjusting the arguments of your test command.

For example, using Python and two of its popular test frameworks, we can enable reporting as follows:

With pytest:

pytest --junit-xml=report.xml

With unittest:

python -m junitxml.main -s src -p '*_test.py'

Even with Perl, the setup is still relatively simple:

PERL_TEST_HARNESS_DUMP_TAP=./test_output prove --timer --formatter TAP::Formatter::JUnit src
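To make the unittest case less opaque, here is a minimal sketch of what a JUnit XML wrapper around unittest does in principle: record each test's outcome and duration, then serialise them as testcase elements. This is not the junitxml package's implementation. The RecordingResult class and to_junit_xml function are hypothetical names for this example, and only passes, failures, errors and skips are handled.

```python
import time
import unittest
import xml.etree.ElementTree as ET


class RecordingResult(unittest.TestResult):
    """Records (test id, outcome, detail, seconds) for each test that runs."""

    def __init__(self):
        super().__init__()
        self.records = []

    def startTest(self, test):
        super().startTest(test)
        self._started = time.perf_counter()
        self._outcome = ("passed", "")

    def addFailure(self, test, err):
        super().addFailure(test, err)
        # The base class stores (test, formatted traceback) pairs.
        self._outcome = ("failure", self.failures[-1][1])

    def addError(self, test, err):
        super().addError(test, err)
        self._outcome = ("error", self.errors[-1][1])

    def addSkip(self, test, reason):
        super().addSkip(test, reason)
        self._outcome = ("skipped", reason)

    def stopTest(self, test):
        super().stopTest(test)
        seconds = time.perf_counter() - self._started
        self.records.append((test.id(), *self._outcome, seconds))


def to_junit_xml(records, suite_name="unittest"):
    """Build a <testsuite> element from the recorded results."""
    suite = ET.Element("testsuite", name=suite_name, tests=str(len(records)))
    for test_id, outcome, detail, seconds in records:
        classname, _, name = test_id.rpartition(".")
        case = ET.SubElement(
            suite, "testcase",
            classname=classname, name=name, time=f"{seconds:.3f}",
        )
        if outcome != "passed":
            # <failure>, <error> or <skipped> child carrying the detail text.
            ET.SubElement(case, outcome).text = detail
    return suite


class ExampleTest(unittest.TestCase):
    def test_ok(self):
        self.assertEqual(1 + 1, 2)

    @unittest.skip("not ready")
    def test_skipped(self):
        pass


result = RecordingResult()
unittest.defaultTestLoader.loadTestsFromTestCase(ExampleTest).run(result)
print(ET.tostring(to_junit_xml(result.records), encoding="unicode"))
```

In practice an existing wrapper is the better choice, as it will cover details this sketch ignores, such as class-level setup errors and subtests.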

Integration with CircleCI

There are many continuous integration SaaS providers to choose from. I like how CircleCI gives individual test details and insights into your builds on top of the usual features you would expect from a CI tool.

To see your test details in their UI, you need to set up two extra steps in your CI config:

- Create a JUnit XML file of your test results.

- Tell CircleCI where the test results are located so it can process them.

Full information on configuring your project can be found in their extensive docs, but we will only cover the configuration relating to test results.

Once you have authorised CircleCI to access your project repositories, create a file named .circleci/config.yml at the root of your project.

Orbs to the rescue

CircleCI supports "orbs" to do the majority of the configuration for us. Orbs are prebuilt sections of functionality that make your CI setup simpler and easier to maintain.

Here is a very basic example that will upload your test results:

version: 2.1

orbs:
  python: circleci/python@1.5.0

workflows:
  main:
    jobs:
      - python/test:
          test-tool: pytest
          test-tool-args: '--junitxml=test-report/pytest.xml'
          version: '3.10.4'

The lines:

orbs:
  python: circleci/python@1.5.0

enable the Python orb, and the lines:

- python/test:
    test-tool: pytest
    test-tool-args: '--junitxml=test-report/pytest.xml'
    version: '3.10.4'

run our unit tests using pytest, save the results to the file test-report/pytest.xml and, finally, upload this file to CircleCI for processing.

Custom Python setup

The above config is very simple, but the Python orb offers more configuration options to tailor it to your needs. We will now cover a more involved setup.

Your CI process will contain a number of steps. The important step for exporting your test results is store_test_results, which just needs a path to your generated JUnit XML. Let's look at a full Python example:

version: 2.1

orbs:
  python: circleci/python@1.5.0

jobs:
  build_unittest:
    executor:
      name: python/default
      tag: '3.10.4'
    steps:
      - checkout
      - python/install-packages:
          pkg-manager: pip
      - run:
          name: test
          command: |
            python -m junitxml.main -s src -p '*_test.py'
      - store_test_results:
          path: junit.xml

workflows:
  main:
    jobs:
      - build_unittest

In the config above we perform the following steps:

- Use a Python image.

- Check out code from the repo.

- Install dependencies using pip.

- Run unit tests using the junitxml unit test wrapper, which creates a junit.xml file in the root directory of the project.

- Upload test results.

We are still using the orb to install dependencies, as that's fairly standard, but we now run a custom unit test step because the orb does not support the junitxml wrapper we need.

Viewing results

I've created two GitHub project examples that have their CI setup using the above method to give example test results:

Both of these projects have unit tests configured, including one that fails randomly and another that takes 10 seconds to run, to show some example results.

Although anyone can view the CI results on open-source projects, the insights CircleCI provides are only available to the project's owner(s), so I have included screenshots of their results below.

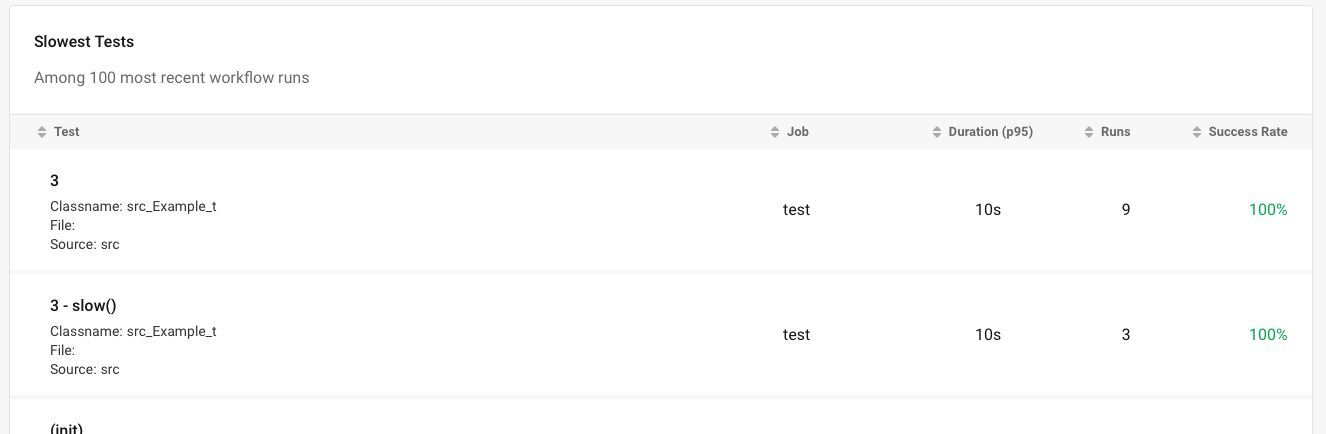

We can clearly see that our 10 second test takes the longest amount of time to run in the following screenshot:

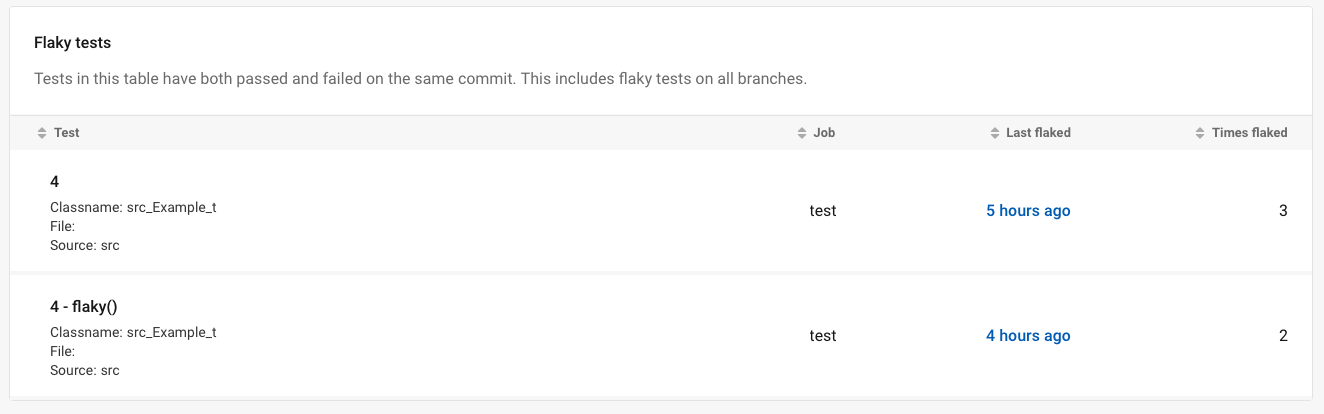

We can also see that our randomly failing test has been marked as flaky in the following screenshot:

Consider recording test results in your projects to understand your CI times and improve visibility of potential problem areas in your codebase.

Even with the CircleCI features above, there is so much more that could be gathered and reported on using CI build metadata. I'm hopeful that more CI providers will expand their features in this area in the future.

Last updated: 26/02/2022